Get started - The basics

Our basic concepts and entities for data transformation

What is Datamorf?

Datamorf is a user-friendly platform for processing and transforming data. Its components (triggers, computations, and destinations) work together to automate data processing workflows. Triggers are the entry points for data, computations are the instructions that transform it, and destinations are where the processed data is sent. You create a workflow by connecting triggers, computations, and destinations to achieve your data processing goals. With Datamorf, you can process data without writing complex code, which makes it accessible to users of all skill levels.

Entities

Datamorf has three main entities that work together to process your data and send it to your desired destination: computations, destinations, and workflows.

To get started, let's add a destination. A destination is where the data resulting from the transformation is sent, for example another webhook endpoint that receives the computed data. To create one, go to the left side menu, click on Destinations, and add a new one. Give it a name and a destination endpoint, which is the webhook URL where you want the resulting data to be sent. For example, this could be a RudderStack or Fivetran endpoint that collects events.

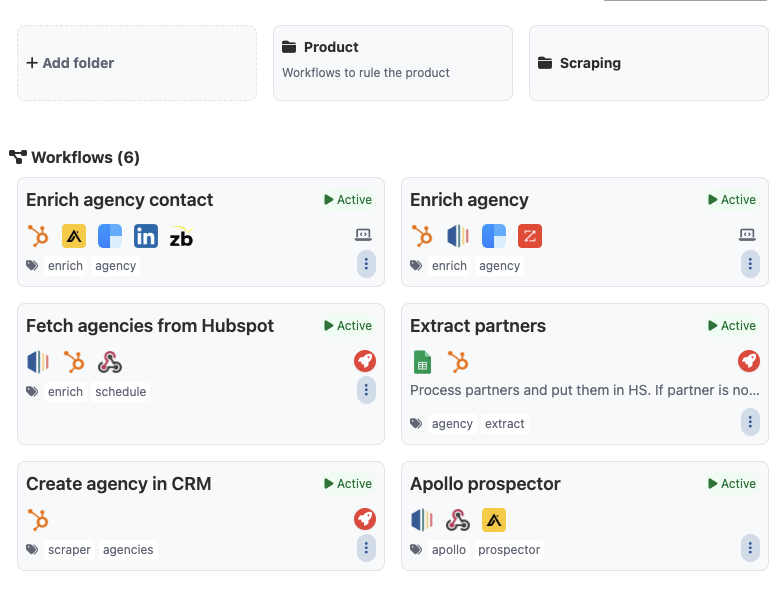
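
If you want to test a destination before pointing it at a production system, a small local receiver can help. The sketch below is only an illustration: it assumes the destination endpoint receives the computed data as a JSON object in the body of an HTTP POST request, and the names `handle_body`, `DestinationHandler`, and `serve`, as well as the port, are hypothetical choices that are not part of Datamorf.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Any, Dict, Tuple


def handle_body(raw: bytes) -> Tuple[int, Dict[str, Any]]:
    """Parse a request body and return (HTTP status, parsed JSON object)."""
    try:
        payload = json.loads(raw)
    except ValueError:
        # Covers empty bodies, malformed JSON, and invalid UTF-8.
        return 400, {}
    if not isinstance(payload, dict):
        # Assumption: the computed data arrives as a JSON object.
        return 400, {}
    return 200, payload


class DestinationHandler(BaseHTTPRequestHandler):
    """Hypothetical receiver for the webhook calls made to a destination."""

    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        status, payload = handle_body(self.rfile.read(length))
        if status == 200:
            print("Received computed data:", payload)
        self.send_response(status)
        self.end_headers()


def serve(port: int = 8000) -> None:
    """Block and listen for incoming webhook calls on the given port."""
    HTTPServer(("", port), DestinationHandler).serve_forever()
```

Calling `serve()` starts the receiver locally. To use it as a destination endpoint, it has to be reachable at a public URL, which you would then enter as the destination endpoint.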

Finally, let's create a workflow. A workflow is a set of triggers, computations, and destinations. When a trigger is activated, the workflow starts, runs all of its computations, and sends the results to the defined destinations. Each workflow accepts multiple triggers, computations, and destinations at once. On the left side menu, click on Workflows and add a new one. Give it a name and adjust the initial settings (these are informational only, nothing critical).

Creating a workflow

Now, let's dive into each entity:

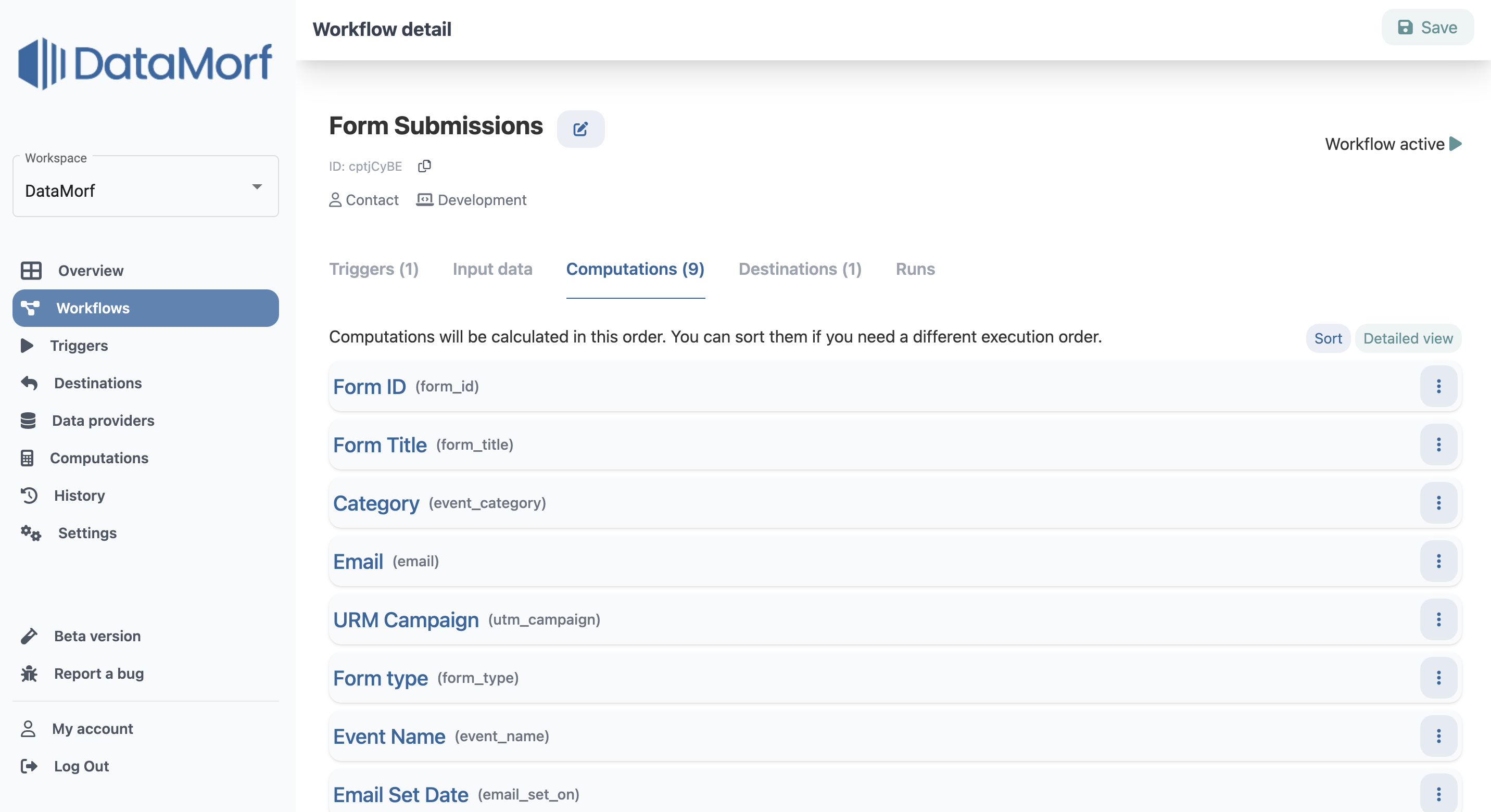

- Computations: this is where the transforming magic happens! Add as many computations as you need. Each one requires a name (a readable label for you and your team), an output name (the property name in the resulting JSON object), and a computation mode. The mode defines how the datapoint is handled and transformed. Some modes accept multiple inputs, and some only one. The configuration options also adapt to the needs of each mode. You can find all computation modes in the "computations" reference on the left side menu.

- Destinations: add a new one and select the destination we just created, then also add the pre-defined destination called "respond to webhook". With this destination, the data is sent back to the endpoint that activated the trigger. When adding a destination to a workflow, you can select which objects you want to send to that specific destination. To keep things simple for now, we will enable only "computed data".

- Runs: here we show a history of past workflow executions. You can analyze the data received and the result of each computation and enrichment. We store workflow results for a specific number of days; if you need this period adjusted, please don't hesitate to contact us.
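
To make the relationship between a computation's output name and the resulting JSON object more concrete, here is a conceptual sketch. It is not how Datamorf is implemented: the `Computation` class, the `run_computations` function, the `inputs` field, and the modes `concat` and `uppercase` are all hypothetical and only illustrate the idea that each computation writes its result under its output name.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

# Hypothetical modes for illustration; these are NOT Datamorf's real mode names.
MODES: Dict[str, Callable[[List[Any]], Any]] = {
    "concat": lambda values: " ".join(str(v) for v in values),  # multiple inputs
    "uppercase": lambda values: str(values[0]).upper(),  # single input
}


@dataclass
class Computation:
    name: str          # readable name for you and your team
    output_name: str   # property name in the resulting JSON object
    mode: str          # how the datapoint is transformed
    inputs: List[str]  # assumed: keys to read from the incoming payload


def run_computations(
    payload: Dict[str, Any], computations: List[Computation]
) -> Dict[str, Any]:
    """Apply every computation and collect the results by output name."""
    computed: Dict[str, Any] = {}
    for computation in computations:
        values = [payload.get(key) for key in computation.inputs]
        computed[computation.output_name] = MODES[computation.mode](values)
    return computed


payload = {"first_name": "Ada", "last_name": "Lovelace", "country": "uk"}
computations = [
    Computation("Full name", "full_name", "concat", ["first_name", "last_name"]),
    Computation("Country code", "country_code", "uppercase", ["country"]),
]
computed_data = run_computations(payload, computations)
print(computed_data)
```

In this sketch, the two output names `full_name` and `country_code` become the keys of the computed data object.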

At this point we have a fully functional workflow that will be executed whenever a payload is received by our trigger.
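
To try the workflow, you can send a test payload to the trigger. The following is a minimal sketch that assumes the trigger accepts a JSON body over HTTP POST and that the response body, if any, is JSON. `TRIGGER_URL` is a placeholder, not a real Datamorf address, and the helper names `build_trigger_request` and `send` are hypothetical.

```python
import json
import urllib.request
from typing import Any, Dict

# Placeholder only: replace with the URL of your own trigger.
TRIGGER_URL = "https://example.com/your-trigger-url"


def build_trigger_request(url: str, payload: Dict[str, Any]) -> urllib.request.Request:
    """Build a POST request that carries the payload as a JSON body."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request) -> Any:
    """Send the request and parse the response body, assuming it is JSON."""
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.loads(response.read() or b"null")


request = build_trigger_request(TRIGGER_URL, {"first_name": "Ada", "last_name": "Lovelace"})
# Call send(request) once TRIGGER_URL points at your own trigger.
```

If the "respond to webhook" destination is enabled, the value returned by `send` would be expected to contain the data sent back to the caller; the exact shape of that response is not specified here.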

Conclusion

We hope this guide has helped you understand the different components of Datamorf and how to create your first workflow. Our platform is designed to make data processing and transformation as easy as possible, so you can focus on making the most of your data. If you have any questions or need any assistance, please don't hesitate to reach out to our support team. We are always here to help you get the most out of your data with Datamorf.

Get a free consultation with us here:

Get free consultation

Ready to get started?

Join now and get a free consultation and extended features on the free plan. Sign up now and start exploring!